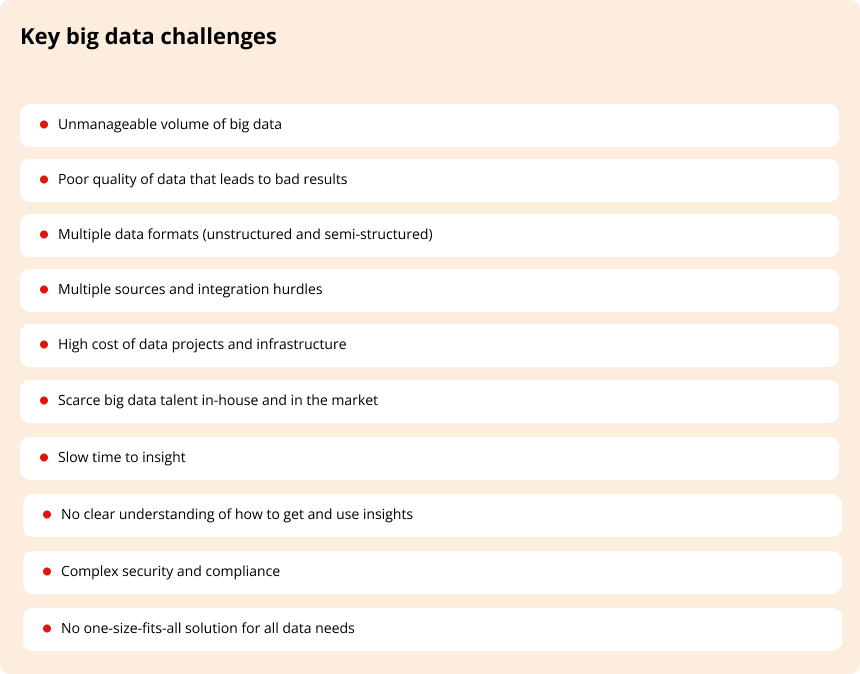

10 Big Data Challenges and Solutions to Tackle Them

Can’t use your data in full? You are probably dealing with some of the major big data challenges that prevent you from gaining all the advantages of this valuable resource. And you are not alone.

97% of organizations invest in data initiatives, but only a quarter of them report that they have reached the level of an insight-driven organization, according to the 2022 NewVantage survey.

Knowing your enemy is the first step to winning the battle, right? So let’s review the key big data problems and the solutions that can help you tackle them. But first, a quick recap of what counts as big data, plus a few examples and business cases to warm up.

Big data refers to the large, exponentially growing volume of data that often exists in various formats within an organization and comes from different sources. In other words, it is massive, multiform, and all over the place. These characteristics explain most of the big data problems we will talk about in this post.

So an inventory spreadsheet for the previous year? Not big data. Warehouse management data that includes inventory files, employee performance records, facility usage data from smart lighting and power management systems, location tracking, and heatmaps all together? That’s the one.

Big data plays an enormous role in the way organizations make decisions, design their products, and run their businesses in nearly every industry.

For example, major retail brands like Nike use big data technologies to monitor consumer trends and reshape their product strategies and marketing campaigns. Disruptive brands like Tesla challenge incumbents by building their entire business on data. From self-driving cars to roof tiles, Tesla’s products rely heavily on data at scale.

And every other leading brand in every key industry, from Maersk in shipping to Netflix in streaming, leverages its data to run operations effectively. However, despite all the investment and the abundance of tools on the market, only a fraction of companies actually manage to squeeze value from their data.

Only 17% of manufacturing executives report benefiting from their data analytics efforts, according to a 2022 BCG survey.

The main challenges of big data stem from technological, organizational, and operational constraints, such as a lack of skills or adequate infrastructure. Let’s break these challenges down into small, easy-to-grasp problems and offer actionable solutions.

1. Unmanageable volume

Big data lives up to its name. Companies sit on terabytes and even exabytes of continuously growing data that can easily get out of hand if not managed properly. Without adequate architecture, computing power, and infrastructure in place, businesses can’t keep up with this growth and, as a result, miss the opportunity to extract value from their data assets.

43% of decision-makers believe their infrastructure won’t be able to keep up with growing data demands in the future, according to Dell.

Solution

- Use dedicated data management and storage technologies to handle the ever-increasing volume of data. Whether you choose cloud, on-premises hosting, or a hybrid approach, make sure your choice aligns with your business goals and organizational needs. For instance, on-premises infrastructure won’t scale instantly (you need to physically expand your hardware and admin team), but it’s a good choice if you want to keep all your data to yourself. By contrast, cloud solutions (public, private, or hybrid) give you enormous flexibility and the resources to manage practically any data volume, especially if you don’t have enough computing power to deal with it in-house. But the cloud comes at a recurring cost.

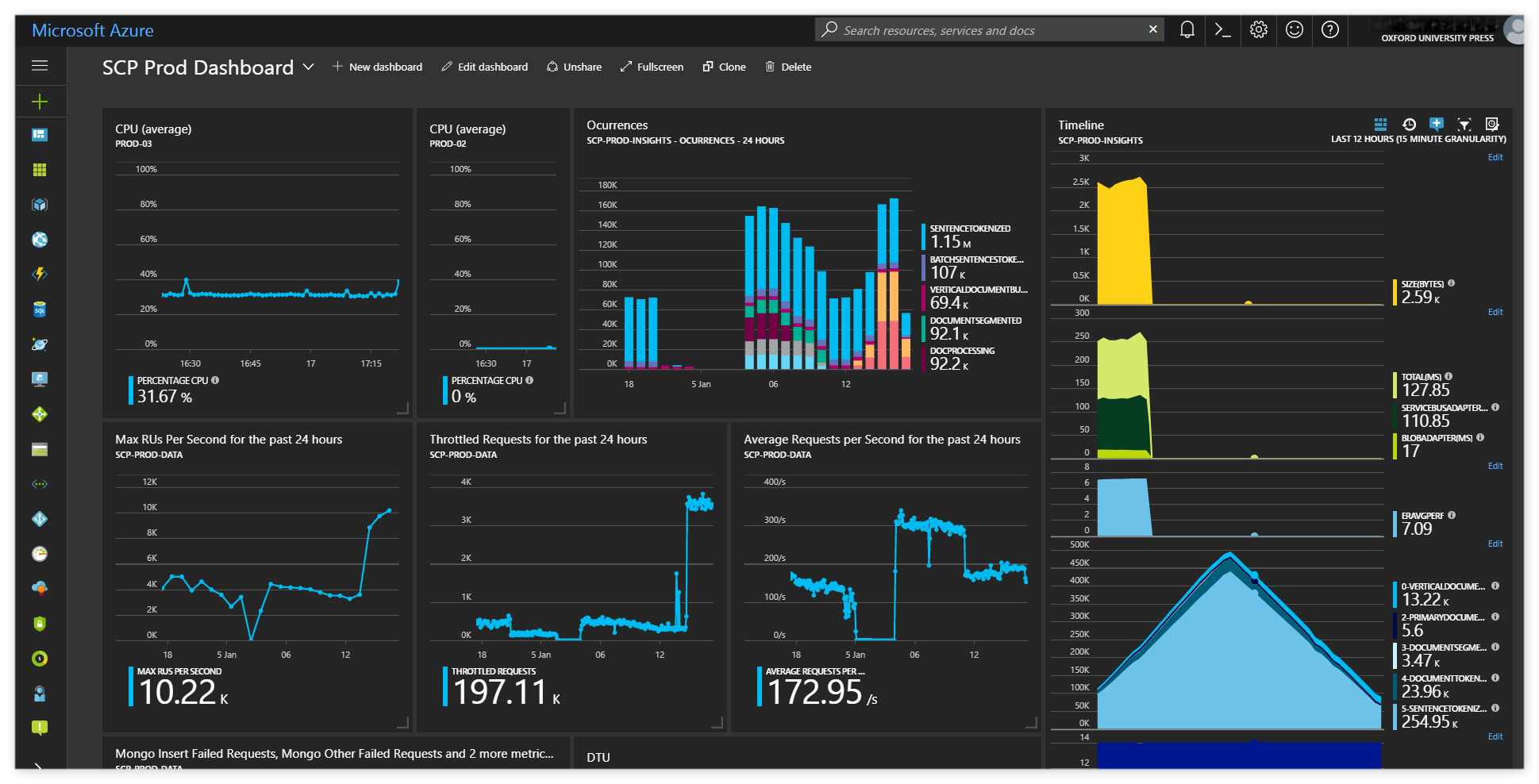

- Design a scalable architecture and tooling that can adapt to the growing volume of data without compromising its integrity. In the example below, you can see how we used Azure Cosmos DB to build a scalable data processing system for Oxford University Press.

Example: Oxford University Press (OUP) was struggling to process the massive volume of lexical entries needed to build its English corpus effectively. As OUP’s tech partner since 2015, our team joined efforts with the OUP and MS Azure teams to design a modular system for data collection, cleansing, filtering, and processing based on Cosmos DB and NLP toolkits. This cloud-based system offers unlimited scalability and elasticity: it can process all English-language news articles published daily across the entire Internet in only 3 hours and withstand a 10x load. OUP can now process data 5 times more effectively than before.
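How does a system like this scale out? Databases such as Azure Cosmos DB spread data across partitions based on a partition key you choose. The Python sketch below only illustrates the general idea of hash partitioning; it is not how Cosmos DB works internally, and the function names and the `customer_id` field are hypothetical.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a partition key to a partition index using a stable hash.

    hashlib is used instead of the built-in hash(), which is randomized
    per process for strings and would place keys differently on each run.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def distribute(records, key_field: str, num_partitions: int):
    """Group records into partitions by the value of key_field."""
    partitions = defaultdict(list)
    for record in records:
        index = partition_for(str(record[key_field]), num_partitions)
        partitions[index].append(record)
    return partitions


if __name__ == "__main__":
    # Hypothetical records keyed by customer_id.
    records = [{"customer_id": f"c{i}", "amount": i * 10} for i in range(1000)]
    parts = distribute(records, "customer_id", num_partitions=8)
    for index in sorted(parts):
        print(index, len(parts[index]))
```

Records with the same key always land in the same partition, so a lookup by key touches a single node. Note that with plain modulo hashing, changing `num_partitions` remaps most keys, which is why production systems typically split partitions or use consistent hashing instead.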

2. Bad data that leads to bad results

Poor data quality is one of the biggest challenges of big data, costing the US alone more than $3 trillion every year. How does so much money go down the drain? Poor-quality data leads to errors, inefficiency, and misleading insights, which ultimately translate into business costs.

The damage can be small, for instance, when a company fails to match a customer with the right order because of an incorrect entry. Or it can be painfully big and cost millions, as with the 2008 financial crisis, which has been partly attributed to bad data.

What exactly counts as bad data, then? Duplicate, outdated, missing, incorrect, unreadable, and inconsistent data can compromise the quality of the whole set. Even minor errors and inaccuracies lead to serious big data problems. This is why it’s so important to keep tabs on data quality. Otherwise, your data may cause more damage than benefit.

Solution

- The first step towards good data hygiene is to have processes and people in place to take care of data within the organization. Establish adequate data governance that defines the tools and procedures for data management and access control.

- Set up an effective process for cleansing, filtering, sorting, enriching, and otherwise managing data using modern data management tools, of which there are plenty. If you work with one of the popular cloud platforms, you probably already have all the tools you need at hand.

- It’s essential to understand how to sort and filter data based on your business objectives. So you may need to bring in the business folks who will actually use this data to define data quality requirements.
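Cleansing does not have to start with heavy tooling. A rule-based pass that catches the bad-data types listed earlier (duplicate, missing, and inconsistently formatted values) is often the first step. Here is a minimal plain-Python sketch that assumes hypothetical customer records with `customer_id`, `email`, and `country` fields; in practice, you would likely use the data quality tooling of your platform.

```python
from dataclasses import dataclass, field

# Hypothetical schema: the fields every customer record must have.
REQUIRED_FIELDS = ("customer_id", "email", "country")


@dataclass
class QualityReport:
    clean: list = field(default_factory=list)
    duplicates: list = field(default_factory=list)
    incomplete: list = field(default_factory=list)


def normalize(record: dict) -> dict:
    """Trim whitespace and unify casing so equivalent values compare equal."""
    out = dict(record)
    if isinstance(out.get("email"), str):
        out["email"] = out["email"].strip().lower()
    if isinstance(out.get("country"), str):
        out["country"] = out["country"].strip().upper()
    return out


def check_quality(records) -> QualityReport:
    """Sort records into clean, duplicate, and incomplete buckets."""
    report = QualityReport()
    seen_emails = set()
    for raw in records:
        record = normalize(raw)
        # Missing data: a required field is absent, None, or an empty string.
        if any(record.get(f) in (None, "") for f in REQUIRED_FIELDS):
            report.incomplete.append(record)
            continue
        # Duplicate: the normalized email has already been seen.
        if record["email"] in seen_emails:
            report.duplicates.append(record)
            continue
        seen_emails.add(record["email"])
        report.clean.append(record)
    return report


if __name__ == "__main__":
    records = [
        {"customer_id": 1, "email": "Ann@Example.com ", "country": "us"},
        {"customer_id": 2, "email": "ann@example.com", "country": "US"},
        {"customer_id": 3, "email": "", "country": "DE"},
    ]
    report = check_quality(records)
    print(len(report.clean), len(report.duplicates), len(report.incomplete))  # 1 1 1
```

Normalizing before deduplicating matters: without it, "Ann@Example.com " and "ann@example.com" would slip through as two different customers.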

3. Multiple data formats

This may come as a shock, but most of the data we have (80-90%, according to cio.com) is unstructured. Anything that doesn’t sit in a database table (emails, customer reviews, video, etc.) is probably unstructured or semi-structured. This brings us to another set of big data challenges: figuring out how to bring heterogeneous data into a format that fits your business intelligence needs and, at the same time, matches the requirements of the tools you use down the pipeline for analytics, visualization, predictions, etc.

Solution

- Figure out how to use modern data processing technologies and tools to reformat unstructured data and derive insights from it. If you deal with multiple formats, you may have to combine different tools to parse data (e.g., text and image recognition engines based on machine learning) and extract the information you need.

- Adopt or create custom applications that help you speed up and automate the process of converting raw data into valuable insights. The choice will depend on the source and nature of your data and on the unique requirements of your business.

Example: A global agritech analytics company was struggling with inefficient manual processing of invoices that came in different formats, including paper, text, and non-standardized PDFs. They asked Digiteum to develop an MVP for automated document processing that would enable fast and cost-effective invoice management. After analyzing several text recognition tools, the team designed an AWS-based tool with custom data extraction algorithms and open-source OCR that uses deep learning and computer vision for high-precision invoice processing. The MVP exceeded the original text recognition quality goals, which made it possible to automate the invoice management process and reduce manual work by 80%.
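Whatever tools do the recognition, the end goal is usually the same: map every input format onto one common schema. The sketch below shows that pattern for invoices arriving as CSV, JSON, and free text (for example, OCR output). It is not the system described above; the `Invoice` schema, the field names in each format, and the text pattern are all hypothetical.

```python
import csv
import io
import json
import re
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Invoice:
    """Hypothetical common schema that every input format is converted to."""
    invoice_id: str
    total: Decimal
    currency: str


def from_csv(text: str):
    # Assumes columns named id, total, currency.
    for row in csv.DictReader(io.StringIO(text)):
        yield Invoice(row["id"], Decimal(row["total"]), row["currency"].upper())


def from_json(text: str):
    # Assumes a JSON array of objects with invoiceId, amount, and ccy keys.
    for obj in json.loads(text):
        yield Invoice(obj["invoiceId"], Decimal(str(obj["amount"])), obj["ccy"].upper())


# Assumed free-text pattern, e.g. "Invoice INV-7 total 99.90 EUR".
FREE_TEXT = re.compile(
    r"Invoice\s+(?P<id>\S+)\s+total\s+(?P<total>\d+(?:\.\d+)?)\s+(?P<ccy>[A-Za-z]{3})"
)


def from_text(text: str):
    for m in FREE_TEXT.finditer(text):
        yield Invoice(m["id"], Decimal(m["total"]), m["ccy"].upper())


PARSERS = {"csv": from_csv, "json": from_json, "text": from_text}


def to_invoices(fmt: str, payload: str) -> list:
    """Dispatch the payload to the parser registered for its format."""
    return list(PARSERS[fmt](payload))


if __name__ == "__main__":
    batch = [
        ("csv", "id,total,currency\nINV-1,120.50,usd\n"),
        ("json", '[{"invoiceId": "INV-2", "amount": 75, "ccy": "eur"}]'),
        ("text", "Scanned: Invoice INV-3 total 99.90 GBP"),
    ]
    for fmt, payload in batch:
        for invoice in to_invoices(fmt, payload):
            print(invoice)
```

Keeping one parser per format behind a single dispatch table means a new format only needs a new parser, while everything downstream keeps consuming the same `Invoice` records.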

4. Multiple sources and integration hurdles

The more data, the better, right? Well, in many cases, more data doesn’t equal more value until you know how to put it together for joint analysis. The truth is, one of the most complex challenges in big data projects is integrating diverse data and finding or creating touch points that lead to insights.

This makes the challenge a twofer. First, you need to determine when it makes sense to combine data from different sources. For instance, if you want a 360-degree view of customer experience, you need to bring together reviews, performance, sales, and other relevant data for joint analysis. Second, you need to create a space and a toolkit for integrating and preparing this data for analytics.

Solution

- Take inventory of the sources your data comes from and decide whether it makes sense to integrate them for joint analysis. This is largely a business intelligence task, since it’s the business folks who understand the context and decide what data they need to reach their BI goals.

- Adopt data integration tools that can help you connect data from various sources, such as files, applications, databases, and data warehouses, and prepare it for big data analytics. Depending on the technologies your organization already uses, you can leverage Microsoft, SAP, or Oracle tools, or specialized data integration tools like Precisely or Qlik.
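At its core, the 360-degree customer view mentioned above is a join on a shared key. The plain-Python sketch below merges three hypothetical sources (CRM, orders, reviews) on `customer_id`. The source shapes are assumptions, and real integration tools add the connectors, scheduling, and scale that this toy version lacks.

```python
from collections import defaultdict


def customer_360(crm, orders, reviews):
    """Join three hypothetical sources on customer_id into one profile each.

    crm:     [{"customer_id": ..., "name": ...}]
    orders:  [{"customer_id": ..., "amount": ...}]
    reviews: [{"customer_id": ..., "rating": ...}]
    """
    profiles = defaultdict(
        lambda: {"name": None, "order_count": 0, "revenue": 0.0, "ratings": []}
    )
    for c in crm:
        profiles[c["customer_id"]]["name"] = c["name"]
    for o in orders:
        p = profiles[o["customer_id"]]
        p["order_count"] += 1
        p["revenue"] += o["amount"]
    for r in reviews:
        profiles[r["customer_id"]]["ratings"].append(r["rating"])

    result = {}
    for cid, p in profiles.items():
        ratings = p.pop("ratings")
        # Average rating, or None if the customer never left a review.
        p["avg_rating"] = sum(ratings) / len(ratings) if ratings else None
        result[cid] = p
    return result


if __name__ == "__main__":
    crm = [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]
    orders = [
        {"customer_id": 1, "amount": 120.0},
        {"customer_id": 1, "amount": 30.0},
        {"customer_id": 3, "amount": 50.0},  # no CRM record for customer 3
    ]
    reviews = [{"customer_id": 1, "rating": 4}, {"customer_id": 1, "rating": 5}]
    for cid, profile in sorted(customer_360(crm, orders, reviews).items()):
        print(cid, profile)
```

This is a full outer join on purpose: customer 3 shows up with `name` set to `None`, which surfaces exactly the kind of gap between sources that an integration effort needs to find.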

5. High cost of data projects and infrastructure

50% of executives in the US and 39% of executives in Europe admit that a limited IT budget is one of the biggest barriers that stop them from capitalizing on their data. Big data implementation is expensive. It requires careful planning and involves significant upfront costs that may not pay off quickly.

Moreover, as the volume of data grows exponentially, so does the infrastructure. At some point, it becomes all too easy to lose sight of your assets and the cost of managing them. In fact, up to 30% of cloud spending is wasted, according to Flexera.

Solution

- You can solve most growing big data cost issues by continuously monitoring your infrastructure. Effective DevOps and DataOps practices help you keep tabs on the services and resources you use to store and manage data, identify cost-saving opportunities, and balance scalability expenses.

- Consider costs early on when designing your data processing pipeline. Do you have duplicate data in different silos that doubles your expenses? Can you tier your data by business value to optimize its management costs? Do you have data archiving and deletion practices? Answering these questions can help you create a sound strategy and save a ton of money.

- Select cost-effective tools that fit your budget. Most cloud-based services are provided on a pay-as-you-go basis, meaning your expenses depend directly on the services and computing power you use. And the landscape of big data solutions keeps expanding, allowing you to choose and combine different tools to match your budget and needs.
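Tiering, mentioned in the questions above, can be as simple as moving data that nobody has touched in a while to cheaper storage. The sketch below picks a tier from access recency and estimates the monthly bill under pay-as-you-go pricing. The per-GB prices, the 30/180-day thresholds, and the dataset names are hypothetical placeholders, not any provider’s actual rates.

```python
from dataclasses import dataclass

# Hypothetical per-GB monthly prices; real prices vary by provider and region.
TIER_PRICE_PER_GB = {"hot": 0.023, "cool": 0.010, "archive": 0.002}


@dataclass
class Dataset:
    name: str
    size_gb: float
    days_since_access: int


def choose_tier(ds: Dataset, cool_after: int = 30, archive_after: int = 180) -> str:
    """Pick a storage tier from access recency (thresholds are assumptions)."""
    if ds.days_since_access >= archive_after:
        return "archive"
    if ds.days_since_access >= cool_after:
        return "cool"
    return "hot"


def monthly_cost(datasets, tiered: bool = True) -> float:
    """Estimate monthly storage cost, with or without tiering."""
    total = 0.0
    for ds in datasets:
        tier = choose_tier(ds) if tiered else "hot"
        total += ds.size_gb * TIER_PRICE_PER_GB[tier]
    return round(total, 2)


if __name__ == "__main__":
    datasets = [
        Dataset("clickstream_2023", 5000, 400),
        Dataset("orders", 800, 2),
        Dataset("logs_q1", 2000, 60),
    ]
    for ds in datasets:
        print(ds.name, choose_tier(ds))
    print("all hot:", monthly_cost(datasets, tiered=False))
    print("tiered: ", monthly_cost(datasets))
```

Colder tiers usually charge more for retrieval, so thresholds like these should reflect how often the business actually needs the data, not just its age.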

Example: Migrating from AWS data warehouse services to the more cost-effective Databricks platform helped our client, one of the leading precision medicine players in the global market, reduce medical data management expenses 50x and optimize infrastructure costs for a large-scale big data project.

6. Scarce big data talent in-house and in the market

The talent shortage is one of the hardest and most expensive big data problems to solve, for two reasons. First, it’s getting increasingly hard to find qualified tech talent for a project: demand for data science specialists, engineers, and analysts already exceeds supply. Second, the need for specialists is going to skyrocket in the near future as more companies invest in big data projects and compete for the best talent on the market.

There will be 11.5 million new data science jobs by 2026, according to the US Bureau of Labor Statistics.

Solution

- The simplest and perhaps fastest way to solve a talent shortage is to partner with an experienced, reliable tech provider who can fill the gap for your big data and BI needs. Outsourcing your project may also save you money if hiring in-house is over your budget.

- No one knows your data better than you and your team. Consider upskilling your current engineers to gain the necessary competence and then retain the talent in-house.

- Create analytics and visualization tools that non-technical specialists in your organization can use. Make it easy for employees to get insights and incorporate them into decision-making.

Example: In 2020, a UK data analytics company partnered with Digiteum to speed up the development of a big data solution for diagnostic testing. The goal was to quickly screen and assemble a dedicated team of senior data engineers, developers, DevOps engineers, and QA engineers to build a scalable system in less than a year while staying on budget. Thanks to the quick team scale-up, the first version of the system launched in 8 months.

7. Slow time to insight

Time to insight refers to how quickly you can get insights from your data before the data becomes obsolete. Slow time to insight is a big data challenge that typically stems from cumbersome data pipelines and ineffective data management strategies.

This parameter is more critical in some business cases than in others. Compare, for instance, a consumer behavior analysis based on quarterly data and IoT real-time data analytics for equipment monitoring. The first one can tolerate days or even weeks of delay, while in the second case, even a small latency can turn into serious trouble.

Solution

- If you work on big data and IoT projects where low latency is a key requirement for automation and remote control, consider leveraging edge and fog computing to bring analytics as close to the point of action as possible. This minimizes time to insight and enables fast responses to real-time data.

- Your data strategy isn’t set in stone. Use an agile approach when designing and building your data pipeline, and review it frequently to spot inefficiencies and slowdowns.

- Use modern artificial intelligence technologies and big data visualization techniques and tools to deliver and communicate insights faster.
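To make the edge idea concrete: instead of shipping every raw reading to the cloud and waiting for a central pipeline, the device itself checks each reading and reacts immediately, sending upstream only alerts plus a compact summary. The sketch below shows that pattern for one sensor. The `EdgeAggregator` class, the threshold, and the window size are hypothetical, and a simple fixed threshold stands in for whatever detection logic a real project would use.

```python
from collections import deque
from statistics import mean


class EdgeAggregator:
    """Hypothetical edge-side filter for a single sensor.

    Raw readings stay on the device; only threshold alerts (immediately)
    and a compact summary (periodically) are sent upstream.
    """

    def __init__(self, threshold: float, window: int = 60):
        self.threshold = threshold
        self.window = deque(maxlen=window)  # keeps only the most recent readings

    def ingest(self, value: float):
        """Record a reading; return an alert right away if it breaches the threshold."""
        self.window.append(value)
        if value > self.threshold:
            return {"type": "alert", "value": value}
        return None

    def summary(self) -> dict:
        """Summarize the current window for upstream analytics."""
        if not self.window:
            return {"type": "summary", "count": 0}
        return {
            "type": "summary",
            "count": len(self.window),
            "mean": mean(list(self.window)),
            "max": max(self.window),
        }


if __name__ == "__main__":
    agg = EdgeAggregator(threshold=80.0, window=5)
    alerts = []
    for reading in [70.0, 72.5, 85.0, 71.0, 69.5]:
        alert = agg.ingest(reading)
        if alert:
            alerts.append(alert)  # a real device would act or notify right here
    print(alerts)
    print(agg.summary())
```

The latency win comes from where the decision happens: the alert is raised on the device the moment the reading arrives, while the cloud still gets enough aggregated data for slower, broader analytics.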

8. No clear understanding of how to get and use insights

Extracting insights is one thing. Putting them to use is an entirely different story. If the second doesn’t work, your entire big data strategy may fall flat because it can’t bring any returns.

Solution

- Create a viable business case for your project and bring in business folks to better understand what they need to get from the data and how they can act on it.

- Use advanced analytics to discover new ways of reading and understanding insights, and make them readily available to anyone in the organization.

- Provide modern visualization tools, dashboards, interactive experiences, and intuitive interfaces to drill down into data, explore insights, create reports, and communicate data within the organization.

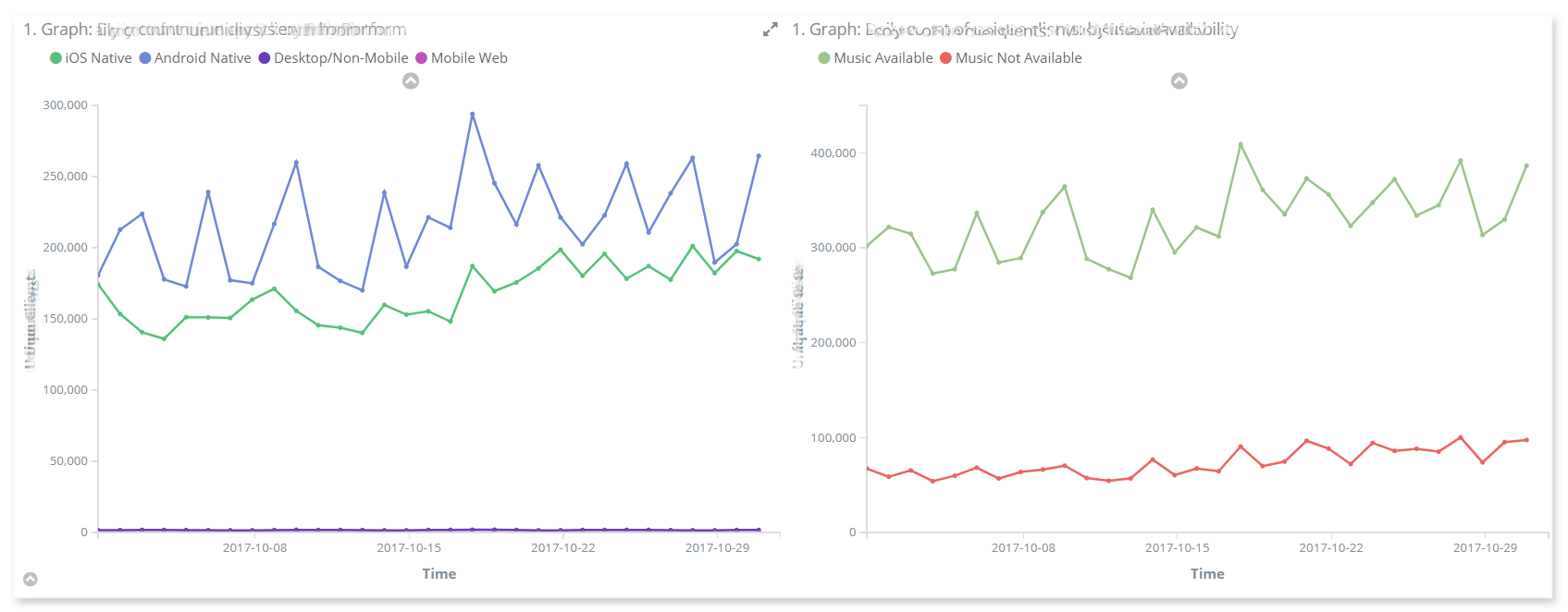

Example: When working with feed.fm, a B2B music streaming platform, we used a combination of Elastic Stack, Apache Spark, and ML tools to create powerful yet easy-to-explore visualizations and analytics for real-time streaming data. Using these tools, feed.fm executives and the platform’s clients could easily access insights on consumer engagement and conversions anytime, anywhere, and use them to make important tactical decisions faster.

9. Security and compliance

More than a third of the big data budget is spent on compliance and defense, according to a NewVantage survey. That is not surprising given the increasing pressure from strict privacy regulations and the risk of security breaches. These risks only grow as the volume of data increases.

Solution

- Build big data security into the original planning, strategy, and design. Treating it as an afterthought can lead to serious big data problems and millions in fines.

- Vet both your data and your sources against the compliance requirements that apply to your niche and location, e.g., GDPR in the EU, or HIPAA and the HITECH Act for healthcare data in the US.
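One common technique for building privacy into the pipeline is to pseudonymize direct identifiers before data reaches analysts. The sketch below replaces them with keyed HMAC-SHA256 tokens using Python’s standard `hmac` module. The `PII_FIELDS` set and the placeholder key are assumptions. Keep in mind that under GDPR, pseudonymized data still counts as personal data, so this reduces exposure but complements rather than replaces the compliance checks above.

```python
import hashlib
import hmac

# Hypothetical list of direct identifiers in your records.
PII_FIELDS = {"email", "phone", "name"}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Replace direct identifiers with keyed HMAC-SHA256 tokens.

    The same value and key always produce the same token, so datasets can
    still be joined on it, but without the key the token cannot be linked
    back to the original value.
    """
    out = {}
    for field_name, value in record.items():
        if field_name in PII_FIELDS and value is not None:
            token = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            out[field_name] = token.hexdigest()
        else:
            out[field_name] = value
    return out


if __name__ == "__main__":
    # Placeholder key: load a real one from a secrets manager, never hard-code it.
    key = b"replace-with-secret-from-vault"
    print(pseudonymize({"email": "ann@example.com", "country": "DE", "amount": 42}, key))
```

A keyed HMAC is used instead of a plain hash because common identifiers such as emails are easy to guess and hash, so an unkeyed digest could be reversed by brute force. Store the key separately from the data.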

10. No one-size-fits-all solution for all data needs

The last but not least big data problem is the lack of a be-all and end-all solution to all the challenges listed above. Sure, there is a sizeable market of platforms, cloud suites, AI services, and analytics, visualization, and dashboarding tools that can cover all your needs. Yet every single big data project we have worked on required a tailored approach to selecting the services and strategies that deliver actionable insights on time and on budget.

Solution

- Conduct a technology analysis to review available solutions and tools against your business objectives, goals, infrastructure, in-house skills, budget, and scalability requirements.

- Bring in an experienced big data service provider with both the tech expertise and the development resources to select the right set of tools for your project, implement them, and maintain and optimize them as your needs change.
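A simple way to structure the technology analysis described above is a weighted decision matrix: rate each candidate against the same criteria, weight the criteria by what matters most to your business, and compare totals. The sketch below assumes hypothetical weights and 1-5 ratings for two unnamed options. It organizes the judgment calls; it doesn’t replace them.

```python
# Hypothetical criteria weights (summing to 1.0), based on the checklist above.
WEIGHTS = {
    "business_fit": 0.30,
    "infrastructure_fit": 0.20,
    "team_skills": 0.20,
    "cost": 0.15,
    "scalability": 0.15,
}


def weighted_score(scores: dict) -> float:
    """Combine 1-5 ratings per criterion into a single weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[c] * scores[c] for c in WEIGHTS)


def rank(candidates: dict) -> list:
    """Return (name, score) pairs, best first."""
    scored = [(name, round(weighted_score(s), 2)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "Option A": {"business_fit": 4, "infrastructure_fit": 4, "team_skills": 4,
                     "cost": 4, "scalability": 4},
        "Option B": {"business_fit": 5, "infrastructure_fit": 2, "team_skills": 2,
                     "cost": 5, "scalability": 5},
    }
    for name, score in rank(candidates):
        print(name, score)
```

In this made-up example, the balanced option edges out the one that looks best on paper but fits poorly with existing infrastructure and in-house skills, which is exactly the trade-off a tailored approach has to weigh.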

Overcome these big data problems and become an insight-driven business on your own terms. We can help you adopt and build strategies, infrastructure, and applications that turn raw data into business-critical insights, without bloating your budget or creating unnecessary complexity within your organization.

- One tech provider for all your needs including engineering, analytics, visualization, infrastructure and big data management services.

- Proven track record of successful big data projects for Oxford University Press, Printique, Diaceutics, Oracle, feed.fm, Applixure, and dozens of companies in agtech, IoT, logistics, energy, retail, and AI.

- In-house senior experts in cloud development, data infrastructure, management, and integration (Microsoft Azure, AWS, Google Cloud, Apache Hadoop, Apache Kafka, Databricks, Elasticsearch, Snowflake).

- Rich expertise in major data analytics, visualization, and dashboarding tools, including ELK, Prometheus, New Relic, Grafana, etc.

- Hands-on experience in IoT application development and streaming data management.

Quick round-up

- Every company that invests in big data has to solve common big data challenges, such as scalability or a lack of adequate infrastructure and expertise, at some point.

- If you face any of these problems in your organization, consider the actionable steps suggested in this post to tackle major big data challenges and make the most of the opportunities.